5 AI Chatbots Tested: Which One Works Best for Content Creators?

We tested 5 AI chatbots to discover which one works best as part of a content creator's workflow. Here are the results.

AI chatbots like ChatGPT have become ubiquitous and vital to how many knowledge workers, content creators, and business owners operate.

But as powerful as ChatGPT is, it’s not the only player in the game — and sometimes, exploring alternatives can reveal new ways to streamline your workflow.

That’s where this guide comes in.

Instead of just rating chatbots based on generic criteria like accuracy, speed, and creativity, I wanted to see how they perform in a real-world content workflow.

So, I paid for the subscriptions (courtesy of Buffer’s AI Tools stipend, which helps me recoup those costs), fed each chatbot data from my own social media, and gave each one a structured challenge designed to evaluate how well it turns existing inputs into meaningful insights.

Let’s dive into the details and determine which chatbot delivers the best results.

The experiment: How I tested the chatbots

There’s no shortage of AI chatbot comparisons online, but many focus on isolated prompts rather than real-world use cases, especially those relevant to you, our audience of creators and small business owners.

To truly test how useful these tools are for creators, I needed a challenge that mirrored how we actually use AI in a content workflow.

So, instead of throwing random questions at each chatbot, I set up a structured experiment:

- I uploaded a CSV file with 60 days of my LinkedIn posts and their performance data, including real engagement metrics from my latest posts.

- I gave each chatbot a series of interconnected prompts to see how well it could analyze, generate, and refine content.

- I kept everything within one continuous conversation, testing their ability to retain and apply context.

What I was testing for

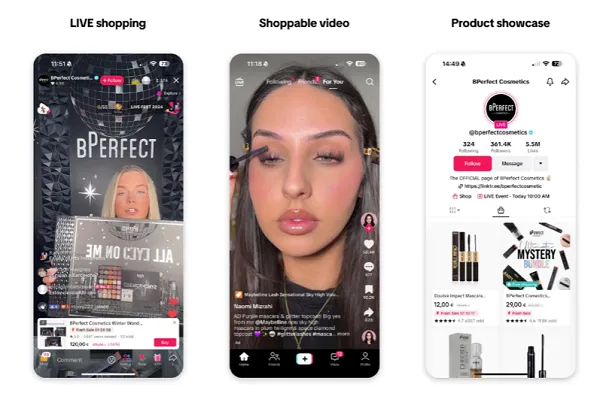

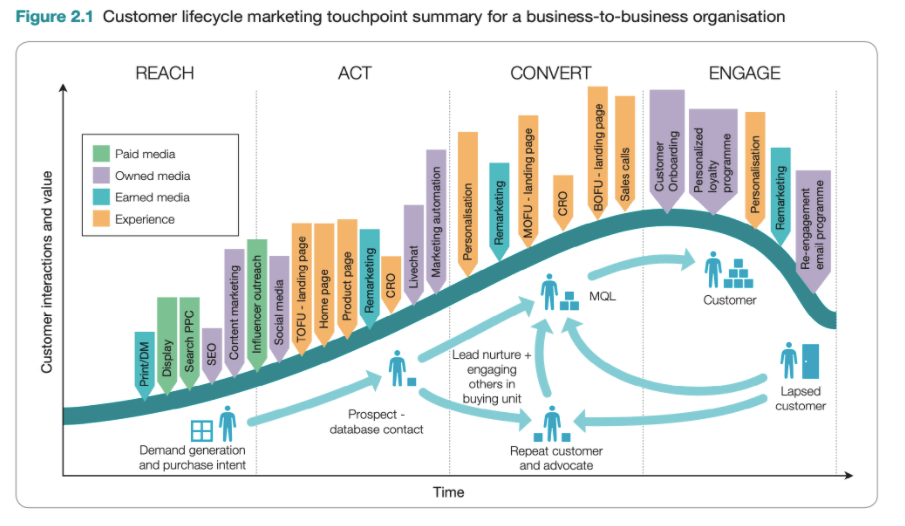

This test was designed to evaluate each chatbot across five key areas:

- Data analysis: Can it break down LinkedIn performance metrics and extract useful insights?

- Creativity & content generation: Can it generate fresh, non-generic (super important) content ideas based on real engagement data?

- Format adaptation: Can it transform a high-performing idea into multiple content formats (LinkedIn post, Twitter thread, short-form video script)?

- Strategic insights: Does it offer clear, actionable advice to improve content performance?

- Workflow optimization: Can it help streamline content planning, repurposing, and tracking?
