What PR Pros Can Learn from the AI Regulation Debate

They may sit on opposite ends of the political spectrum, but California Gov. Gavin Newsom and Rep. Marjorie Taylor Greene have found rare common ground: both are voicing concern over H.R. 1 — the so-called “One Big Beautiful Bill” — which proposes a 10-year freeze on state-level regulation of artificial intelligence.

The language in question comes from Section 43201 of H.R. 1, titled “moratorium.” It states:

“…no state or political subdivision may enforce, during the 10-year period beginning on the date of enactment of this act, any law or regulation of that state or subdivision limiting, restricting or otherwise regulating artificial intelligence models, artificial intelligence systems or automated decision systems entered into interstate commerce.”

That provision has sparked alarm among an unlikely mix of critics, from politicians to tech executives to PR professionals, all warning of what happens when AI growth goes unchecked.

With a recent parliamentary ruling allowing the moratorium section of the bill to bypass the 60-vote threshold, the measure now faces a clearer path to passage with a simple majority. This comes after the proposed legislation narrowly passed the House of Representatives in a 215-214 vote in May. The provision is drawing criticism from both sides of the aisle as lawmakers debate the implications for states’ rights.

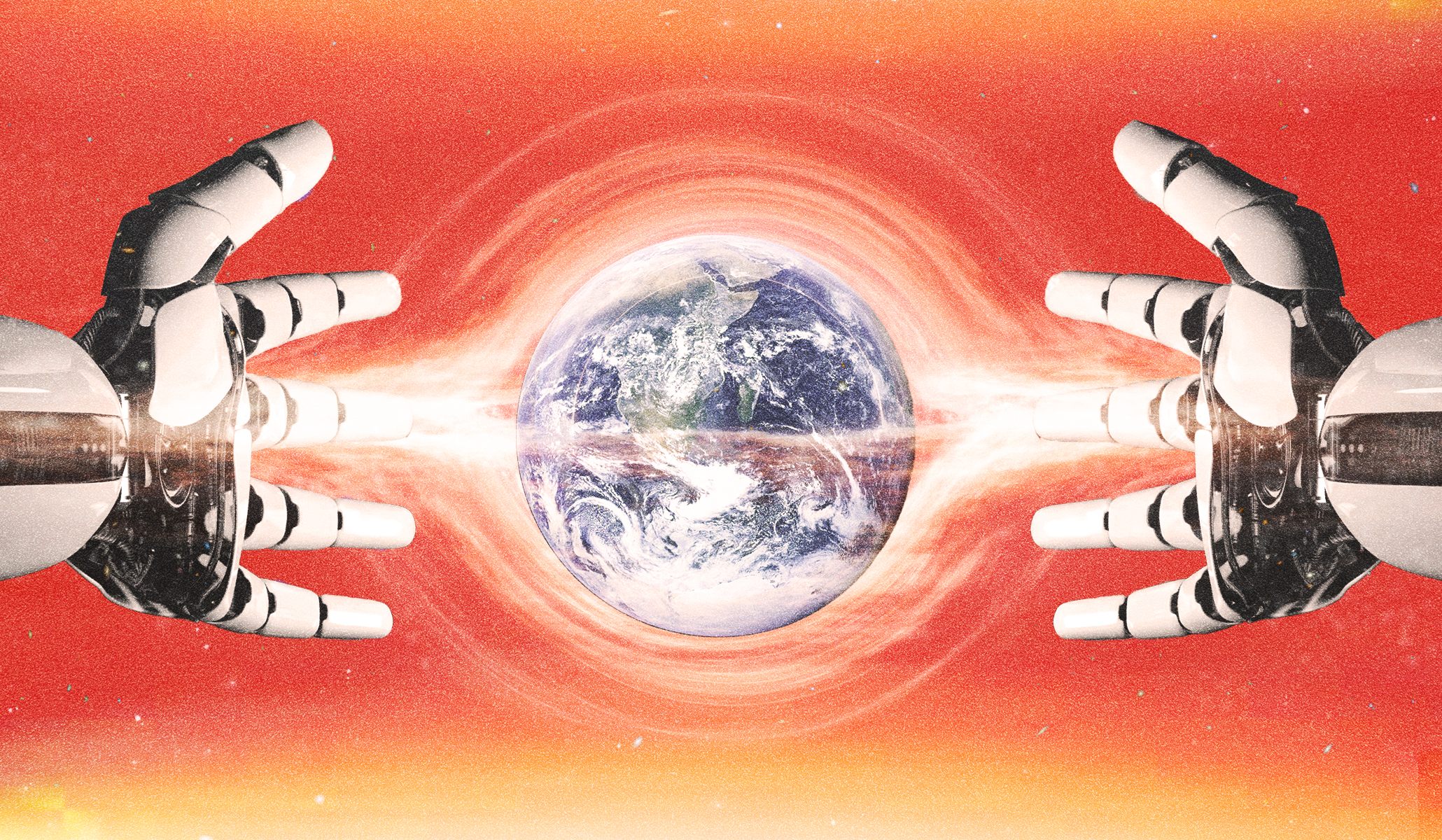

At first, this might sound like a legal or legislative debate. For communication professionals, it represents something potentially bigger: a dangerous precedent that limits accountability and clears the path for an accelerated expansion of generative AI tools already reshaping communications. Even if some of the language in H.R. 1 is revised, it forces a broader conversation about the role of state regulation and the ethical use of emerging technologies in mass communication. Luckily, we have been here before.

A communication refresher

If you sat through a media history class, then this should sound familiar. Every major leap in communication has brought incredible potential, but it has also led to backlash, confusion, and typically a delay in regulation.

Think back to the rise of mass-market newspapers. Printing technology made it easier to get information into people’s hands, but it also gave rise to yellow journalism. That particular era was marked by sensational headlines that sold papers by stirring public outrage. It took a war (Spanish-American) and a public reckoning before credibility and ethics returned to the industry. Radio and television changed everything about how people consumed news, formed opinions and made decisions. But regulation struggled to keep up. States pushing back shaped national standards like the Fairness Doctrine, which required broadcasters to cover controversial topics fairly and honestly.

Then came the internet. It connected the world while raising new questions about credibility and privacy, especially for kids. That pressure led to the Children’s Online Privacy Protection Act. Fast forward to social media in the 2000s and the Cambridge Analytica scandal, in which data from millions of Facebook users was quietly scraped and used to influence public opinion and elections. The fallout helped jumpstart stronger protections, including the California Consumer Privacy Act.

Nearly every major advancement in mass media and communication technology has introduced both meaningful benefits and risks. With regard to artificial intelligence and H.R. 1, the question is: with states’ hands tied, how quickly will AI evolve, and at what cost?

Making sense of what’s changing

Right now, AI-generated content is getting harder to spot. Text, video, images, even voice tools are advancing quickly, and the gap between synthetic and authentic is closing. Early complaints about AI in advertising or media often focused on the obvious glitches. Critics remember the six-fingered hands or weirdly smooth, amorphous faces that tipped off audiences. Those moments made it easy to spot the difference. When audiences could tell something was AI, they were less likely to trust it. That drop in perceived value hit brand credibility and loyalty. Those early glitches are now disappearing. That might sound like a green light for communication professionals eager to explore or lean into AI tools. But as the output gets smoother, the risks become harder to see and easier to ignore.

As with any rapidly evolving technology, ethical concerns often lag behind its adoption. AI tools can produce inaccurate content, or “hallucinations,” without warning. They can unintentionally reinforce race, gender or cultural bias. In the wrong hands, these tools can be used to generate deepfakes with harmful intent. These issues aren’t theoretical. They’re already shaping how the public sees brands, campaigns, and information itself.

The problem is that most communicators might be tempted to use AI tools as-is, right out of the box. When the tech companies building these systems are locked in a race to be the biggest, fastest, strongest, and the first, nuance and responsibility aren’t always their top priorities. The onus of responsibility shifts to the user. That’s part of the inspiration behind Dove’s Keep Beauty Real campaign, which includes an AI prompt playbook for anyone prompting image generators to produce depictions of women.

The role of the professional has to expand

As we move into an accelerated AI future, the focus cannot be only on what AI can do. It must also be on understanding what it can’t do, what it does poorly, and what it shouldn’t do at all. That education is critical, especially as regulation will continue to lag behind rapid updates and powerful new tools.

The responsibility of the practitioner will need to grow to compensate, and professional organizations like PRSA, SPJ, IABC, and others will need to increase training, guidance and public education. The public will need support understanding what’s real, what’s not, and why that distinction matters.

Slowing down to lead responsibly

As AI tools continue to evolve, communication professionals will need to lead with caution, clarity and a sense of responsibility. That means applying the ethical brakes where regulation has yet to catch up. With image, video, text and voice generators becoming more human-like, trust in media will depend on how thoughtfully these tools are integrated, both in what is created and in how it is created.

Practitioners should begin by developing clear internal policies around AI use. This includes transparent disclosure practices, ongoing team training, and an active commitment to reducing harm.

Agencies, firms and practitioners must create environments where ethical AI use is the norm, not the exception. Industry groups must continue expanding their AI literacy efforts, offering members more tools and training to adapt responsibly. Higher education programs, too, have a role to play by preparing students to use AI ethically and to think critically about its implications.

If history has taught us anything, it’s that waiting for regulation is not a strategy. Rather, times like these demand leadership. The future of communication will not be shaped by what AI can do, but by how we choose to use it.

Joshua J. Smith, Ph.D., is an assistant professor of public relations at Virginia Commonwealth University’s Robertson School of Communication.

Illustration credit: song_about_summer

The post What PR Pros Can Learn from the AI Regulation Debate first appeared on PRsay.
