Want to Integrate AI into Your Business? Fine-Tuning Won’t Cut It

By Max Surkiz

Machine learning advice from one CEO to another

Until recently, an “AI business” referred exclusively to companies like OpenAI that developed large language models (LLMs) and related machine learning solutions. Now, any business, often a traditional one, can be considered an “AI business” if it harnesses AI for automation and workflow refinement. But not every company knows where to begin this transition.

As a tech startup CEO, my goal is to discuss how you can integrate AI into your business and overcome a major hurdle: customizing a third-party LLM to create a suitable AI solution tailored to your specific needs. As a former CTO who has collaborated with people from many fields, I have set an additional goal of laying it out in a way that non-engineers can easily understand.

Integrate AI to streamline your business and customize offerings

Since every business interacts with clients, customer- or partner-facing roles are a universal aspect of commerce. These roles involve handling data, whether you’re selling tires, managing a warehouse, or organizing global travel like I do. Swift and accurate responses are crucial. You must provide the right information quickly, drawing on the most relevant resources from both within your business and your broader market. This involves dealing with a vast array of data.

This is where AI excels. It remains “on duty” continuously, processing data and making calculations instantaneously. AI embedded in business operations can manifest in different forms, from “visible” AI assistants like talking chatbots (the main focus of this article) to “invisible” ones like the silent filters powering e-commerce websites, including ranking algorithms and recommender systems.

Consider the traveltech industry. A customer wants to book a trip to Europe, and they want to know:

- the best airfare deals

- the ideal travel season for pleasant weather

- cities that feature museums with Renaissance art

- hotels that offer vegetarian options and a tennis court nearby

Before AI, responding to these queries would have involved processing each subquery separately and then cross-referencing the results by hand. Now, with an AI-powered solution, my team and I can address all these requests simultaneously and with lightning speed. This isn’t about my business though: the same holds true for virtually every industry. So, if you want to optimize costs and bolster your performance, switching to AI is inevitable.

Fine-tune your AI model to focus on specific commercial needs

You might be wondering, “This sounds great, but how do I integrate AI into my operations?” Fortunately, today’s market offers a variety of commercially available LLMs, depending on your preferences and target region: ChatGPT, Claude, Grok, Gemini, Mistral, ERNIE, and YandexGPT, just to name a few. Once you’ve found one you like, ideally one whose weights are openly available, like Llama, the next step is fine-tuning.

In a nutshell, fine-tuning is the process of adapting a pretrained AI model from an upstream provider, such as Meta, for a specific downstream application, i.e., your business. This means taking a model and “adjusting it” to fit more narrowly defined needs. Fine-tuning doesn’t rebuild the model from scratch; instead, you continue training it on a comparatively small set of domain-specific examples, which adjusts the model’s internal “weights” and effectively tells it, “This is important, this isn’t.”

Let’s say you’re running a bar and want to create an AI assistant to help bartenders mix cocktails or train new staff. The word “punch” will appear in your raw AI model, but it has several common meanings. However, in your case, “punch” refers specifically to the mixed drink. So, fine-tuning teaches your model to favor the drink sense of “punch” and set aside, say, references to MMA when it encounters the word.
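For readers who want to see what this looks like in practice, fine-tuning is typically driven by a file of example conversations that demonstrate the behavior you want. The sketch below builds such a file for the bar scenario. The chat-style `messages` layout, the file name `bar_finetune.jsonl`, and the sample questions and answers are all assumptions made up for illustration; check the exact format that your chosen model provider or training library expects.

```python
import json

# Made-up training examples for the bar assistant: each one pairs a
# bartender's question with the answer we want the model to learn.
EXAMPLES = [
    ("What is a punch?",
     "A punch is a mixed drink, usually prepared in a large bowl and served in cups."),
    ("A guest asked for a punch. What should I do?",
     "Offer the punch from today's drinks menu and ask about any allergies."),
]

# Assumed instruction that pins down the meaning of "punch" for this business.
SYSTEM_PROMPT = "You assist bartenders. 'Punch' always means the mixed drink."


def to_chat_record(question: str, answer: str) -> dict:
    """Wrap one question/answer pair in a chat-style training record."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(path: str, pairs: list[tuple[str, str]]) -> int:
    """Write one JSON record per line and return how many were written."""
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in pairs:
            f.write(json.dumps(to_chat_record(question, answer)) + "\n")
    return len(pairs)


if __name__ == "__main__":
    count = write_jsonl("bar_finetune.jsonl", EXAMPLES)
    print(f"Wrote {count} training examples")
```

A real project would need far more than two examples, and the quality of those examples largely determines how well the fine-tuned model behaves.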

Implement RAG to make use of the latest data

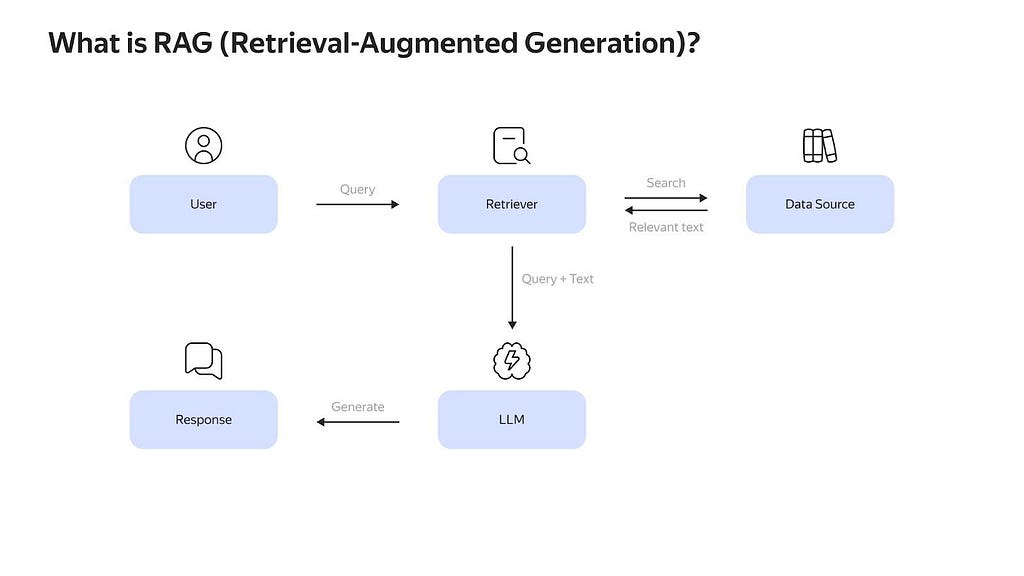

With that said, even a well-fine-tuned model isn’t enough, because most businesses need new data on a regular basis. Suppose you’re building an AI assistant for a dentistry practice. During fine-tuning, you explained to the model that “bridge” refers to dental restoration, not civic architecture or the card game. So far, so good. But how do you get your AI assistant to incorporate information that only emerged in a research piece published last week? What you need to do is let the assistant look up new data at the moment a question is asked and pass it to the model together with that question, a process known as retrieval-augmented generation (RAG).

RAG involves retrieving data from an external source, beyond the pretrained LLM you’re using, and handing the relevant pieces to the model along with the user’s question, so that its answer reflects this new information. Let’s say you’re creating an AI assistant to aid a user, a professional analyst, in financial consulting or auditing. Your AI chatbot needs access to the latest quarterly statements. This specific, recently released data will be your RAG source.
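As a rough illustration of this retrieve-then-answer flow, the sketch below picks the most relevant passages from a small external source and places them in the prompt that would be sent to the model. The documents, the word-overlap scoring, and the `retrieve` and `build_prompt` helpers are assumptions invented for this example; a production system would use a proper search index and an actual LLM call, both of which are omitted here.

```python
import re

# Hypothetical external source: snippets from recently released statements.
DOCUMENTS = [
    "Q3 statement: revenue rose compared with Q2, driven by overseas projects.",
    "Q3 statement: monthly payments on derivative contracts were unchanged.",
    "Office notice: the cafeteria is closed this Friday.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents that share the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    relevant = [(score, doc) for score, doc in scored if score > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in relevant[:top_k]]


def build_prompt(query: str, documents: list[str]) -> str:
    """Combine the retrieved context and the user's question into one prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    question = "What happened to payments on derivative contracts?"
    print(build_prompt(question, DOCUMENTS))
```

The point of the design is that the model itself is never retrained: swapping in next quarter’s statements only means replacing the entries in `DOCUMENTS`.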

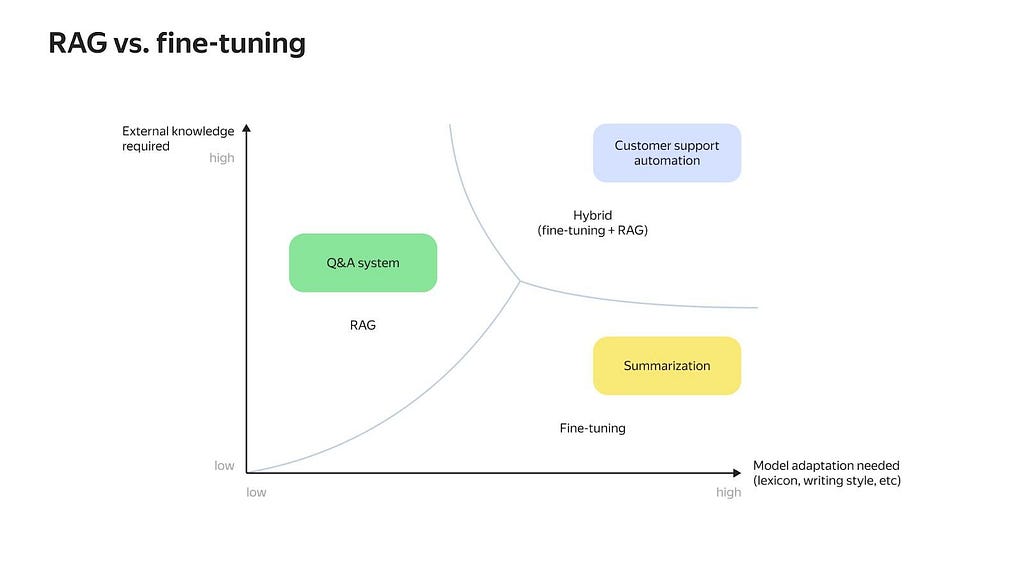

It’s important to note that employing RAG doesn’t eliminate the need for fine-tuning. Indeed, RAG without fine-tuning could work for some Q&A system that relies exclusively on external data, for example an AI chatbot that lists NBA stats from past seasons. On the other hand, a fine-tuned AI chatbot could prove adequate without RAG for tasks like PDF summarization, which are normally a lot less domain-specific. However, in most cases, a customer-facing AI chatbot or a robust AI assistant tailored to your team’s needs will require a combination of both processes.

Move away from vectors for RAG data extraction

The primary challenge for anyone looking to utilize RAG is determining how to prepare their new data source effectively. When a user query is made, your domain-specific AI chatbot retrieves information from the data source. The relevance of this information depends on what sort of data you extracted during preprocessing. So, while RAG will always provide your AI chatbot with external data, the quality of its responses is subject to your planning.

Preparing your external data source means extracting just the right info and not feeding your model redundant or conflicting information that could compromise the AI assistant’s output accuracy. Going back to the fintech example, if you’re interested in parameters like funds invested in overseas projects or monthly payments on derivative contracts, you shouldn’t clutter RAG with unrelated data, such as social security payments.
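One simple way to picture this preparation step is a filter that keeps only the records your assistant is meant to answer questions about and drops duplicates. The `Record` fields, the category names, and the `RELEVANT_CATEGORIES` allow-list below are hypothetical; in practice, people who know the domain would decide what belongs on that list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One item in the raw data source (fields are assumed for illustration)."""
    category: str
    text: str


# Hypothetical allow-list of topics the fintech assistant should cover.
RELEVANT_CATEGORIES = {"overseas_investment", "derivative_payments"}

RAW_RECORDS = [
    Record("overseas_investment", "Funds invested in overseas projects this quarter."),
    Record("social_security", "Social security payments for staff."),
    Record("derivative_payments", "Monthly payments on derivative contracts."),
    Record("derivative_payments", "Monthly payments on derivative contracts."),  # duplicate
]


def prepare_source(records: list[Record]) -> list[Record]:
    """Keep relevant records only, dropping exact duplicates and preserving order."""
    seen: set[Record] = set()
    prepared: list[Record] = []
    for record in records:
        if record.category not in RELEVANT_CATEGORIES:
            continue  # unrelated data would only clutter retrieval
        if record in seen:
            continue  # redundant copy of something already kept
        seen.add(record)
        prepared.append(record)
    return prepared


if __name__ == "__main__":
    for record in prepare_source(RAW_RECORDS):
        print(record.category, "->", record.text)
```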

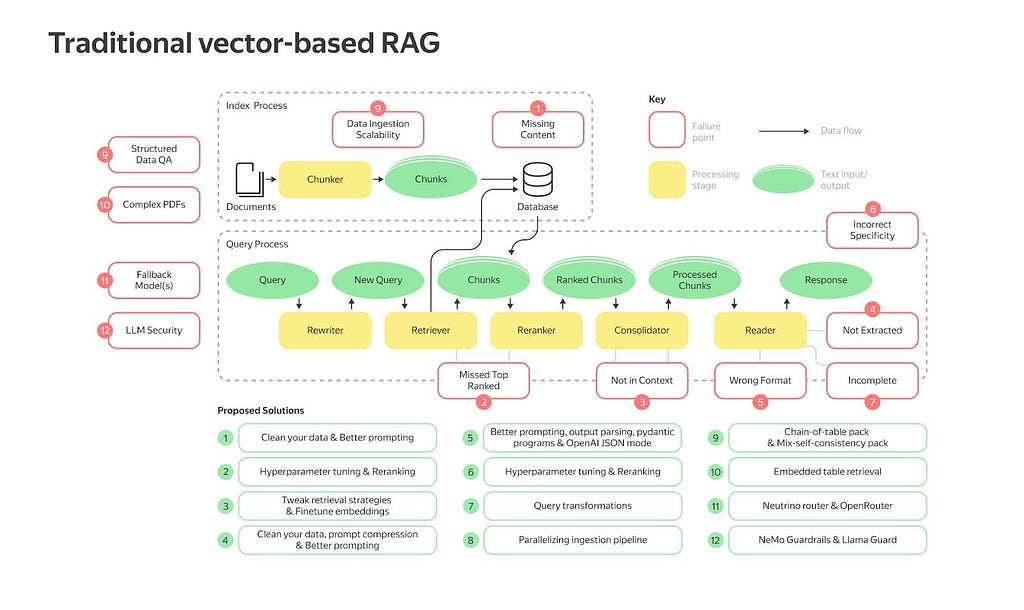

If you ask ML engineers how to achieve this, most are likely to mention “vector” methodology. Although vectors are useful, they have two major drawbacks: the multi-stage process is highly complex, and it ultimately fails to deliver great accuracy.

If you feel confused by the image above, you’re not alone. Being a purely technical, non-linguistic methodology, the vector route uses sophisticated tools to segment large documents into smaller pieces, convert each piece into a list of numbers, and later fetch the pieces whose numbers look most similar to the user’s query. This often results in a loss of intricate semantic relationships and a diminished grasp of language context.

Suppose you’re involved in the automotive supply chain, and you need specific tire sales figures for the Pacific Northwest. Your data source, the latest industry reports, contains nationwide data. Because of how vectors work, you might end up extracting irrelevant data, such as New England figures. Alternatively, you might end up extracting data from your target region that is related but not exactly right, such as hubcap sales. In other words, your extracted data will likely be relevant but imprecise. Your AI assistant’s performance will be affected accordingly when it retrieves this data during user queries, leading to misguided or incomplete responses.
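The toy example below hints at why this happens. It stands in for a real vector pipeline by turning each text chunk into simple word counts and ranking chunks by cosine similarity to the query. Real systems use learned embeddings rather than word counts, so treat the `embed` function, the chunk texts, and the chunk names as simplifying assumptions.

```python
import math
import re
from collections import Counter

# Hypothetical chunks cut from a nationwide industry report.
CHUNKS = {
    "pnw_tires": "tire sales figures for the Pacific Northwest region",
    "ne_tires": "tire sales figures for the New England region",
    "pnw_hubcaps": "hubcap sales figures for the Pacific Northwest region",
}


def embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank(query: str, chunks: dict[str, str]) -> list[tuple[str, float]]:
    """Score every chunk against the query, highest similarity first."""
    query_vec = embed(query)
    scores = {name: cosine(query_vec, embed(text)) for name, text in chunks.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, score in rank("tire sales in the Pacific Northwest", CHUNKS):
        print(f"{name}: {score:.2f}")
```

In this toy setup the correct chunk ranks first, but the hubcap and New England chunks also receive non-zero scores, so a retriever that returns the top two matches would pass hubcap figures to the model alongside the tire figures.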

Create knowledge maps for better RAG navigation

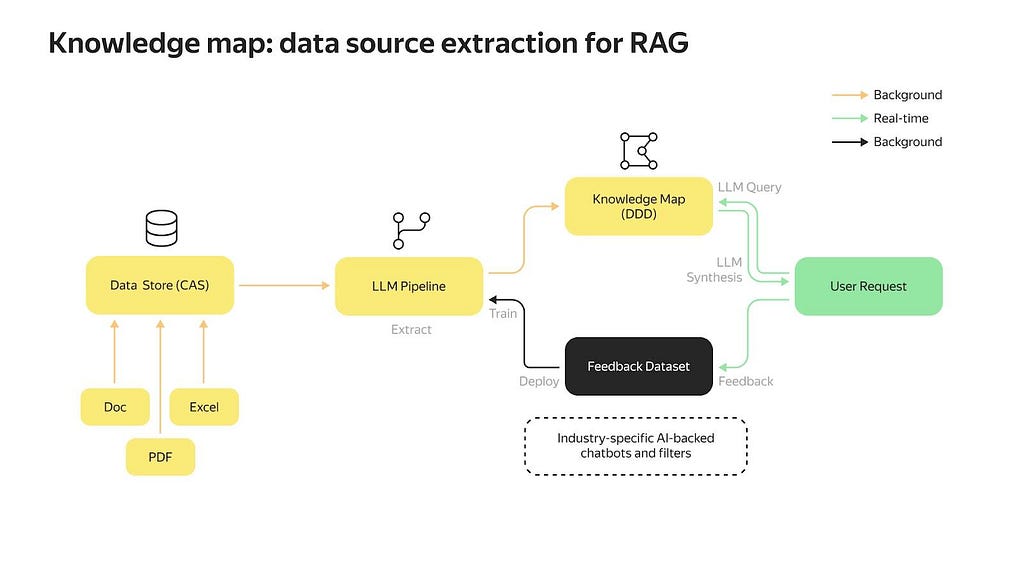

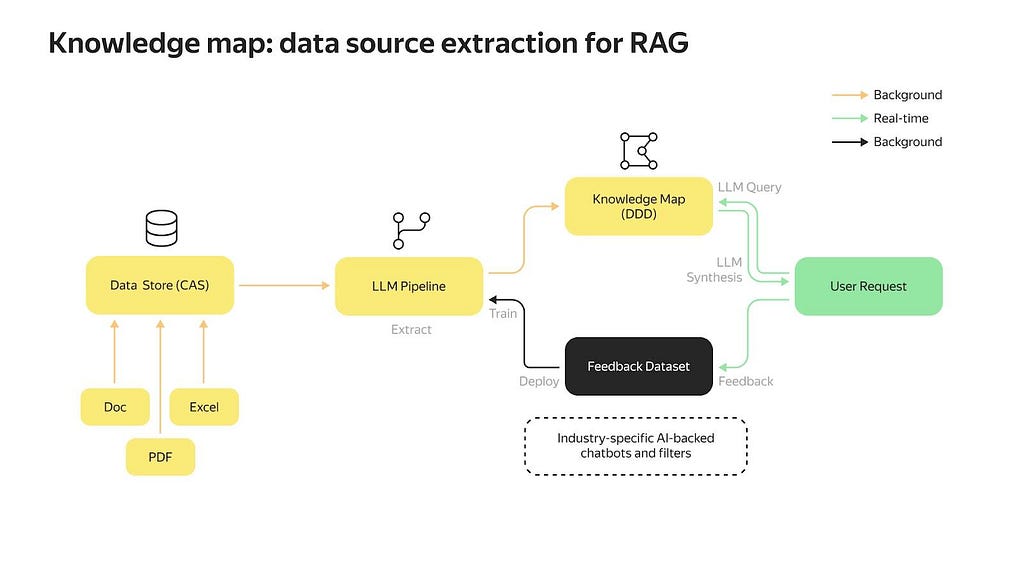

Luckily, there’s now a newer and more straightforward method, knowledge maps, which is already being implemented by reputable tech players like CLOVA X and Trustbit*. Using knowledge maps reduces RAG contamination during data extraction, resulting in more structured retrieval during live user queries.

A knowledge map for business is similar to a driving map. Just as a detailed map leads to a smoother trip, a knowledge map improves data extraction by charting out all critical information. This is done with the help of domain experts, in-house or external, who are intimately familiar with the specifics of your industry.

Once you’ve developed this “what’s there to know” blueprint of your business landscape, integrating a knowledge map ensures that your updated AI assistant will reference this map when searching for answers. For instance, to prepare an LLM for oil-industry-specific RAG, domain experts could outline the molecular differences between the newest synthetic diesel and traditional petroleum diesel. With this knowledge map, the extraction process for RAG becomes more targeted, enhancing the accuracy and relevance of the Q&A chatbot during real-time data retrieval.

Crucially, unlike vector-based RAG systems that just store data as numbers and can’t learn or adapt, a knowledge map allows for ongoing in-the-loop improvements. Think of it as having a dynamic, editable system that gets better through feedback the more you use it. This is akin to performers who refine their acts based on audience reactions to ensure each show is better than the last. This means your AI system’s capacity will evolve continually as business demands change and new benchmarks are set.
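To give a feel for the idea, here is a minimal sketch of one possible shape for a knowledge map, reusing the tire example from earlier. The article does not prescribe a data structure, so everything here is an assumption: the `MapEntry` class, the `required_terms` matching rule, and the placeholder facts are hypothetical stand-ins for entries that domain experts would write and review.

```python
import re
from dataclasses import dataclass, field


@dataclass
class MapEntry:
    """One expert-curated node of a hypothetical knowledge map."""
    topic: str
    required_terms: frozenset[str]
    facts: list[str] = field(default_factory=list)


# Placeholder facts: real entries would come from domain experts.
KNOWLEDGE_MAP = [
    MapEntry("tire_sales_pacific_northwest",
             frozenset({"tire", "pacific", "northwest"}),
             ["<tire sales figures for the Pacific Northwest>"]),
    MapEntry("tire_sales_new_england",
             frozenset({"tire", "new", "england"}),
             ["<tire sales figures for New England>"]),
    MapEntry("hubcap_sales_pacific_northwest",
             frozenset({"hubcap", "pacific", "northwest"}),
             ["<hubcap sales figures for the Pacific Northwest>"]),
]


def lookup(query: str, knowledge_map: list[MapEntry]) -> list[MapEntry]:
    """Return only the entries whose required terms all appear in the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    return [entry for entry in knowledge_map if entry.required_terms <= words]


def add_fact(knowledge_map: list[MapEntry], topic: str, fact: str) -> bool:
    """Feedback loop: let a reviewer append a fact to an existing entry."""
    for entry in knowledge_map:
        if entry.topic == topic:
            entry.facts.append(fact)
            return True
    return False


if __name__ == "__main__":
    for entry in lookup("tire sales in the Pacific Northwest", KNOWLEDGE_MAP):
        print(entry.topic, entry.facts)
```

Because every entry is explicit and human-readable, a reviewer who spots a wrong or missing answer can correct the map directly with something like `add_fact`, which is the kind of in-the-loop improvement described above.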

Key takeaway

If your business aims to streamline workflow and optimize processes by leveraging industry-relevant AI, it’s essential to go beyond mere fine-tuning.

As we’ve already seen, with few exceptions, a robust AI assistant, whether it’s serving customers or employees, can’t function effectively without fresh data from RAG. To ensure high-quality data extraction and effective RAG implementation, companies should create domain-specific knowledge maps instead of relying on the more ubiquitous numerical vector databases.

While this article may not answer all your questions, I hope it will steer you in the right direction. I encourage you to discuss these strategies with your teammates to consider further steps.

*How We Build Better Rag Systems With Knowledge Maps, Trustbit, https://www.trustbit.tech/en/wie-wir-mit-knowledge-maps-bessere-rag-systeme-bauen (accessed 1 Nov. 2024)

Want to Integrate AI into Your Business? Fine-Tuning Won’t Cut It was originally published in Towards Data Science on Medium.
