Interview with Yuki Mitsufuji: Improving AI image generation


Yuki Mitsufuji is a Lead Research Scientist at Sony AI. Yuki and his team presented two papers at the recent Conference on Neural Information Processing Systems (NeurIPS 2024). These works tackle different aspects of image generation and are entitled: GenWarp: Single Image to Novel Views with Semantic-Preserving Generative Warping and PaGoDA: Progressive Growing of a One-Step Generator from a Low-Resolution Diffusion Teacher. We caught up with Yuki to find out more about this research.

There are two pieces of research we’d like to ask you about today. Could we start with the GenWarp paper? Could you outline the problem that you were focused on in this work?

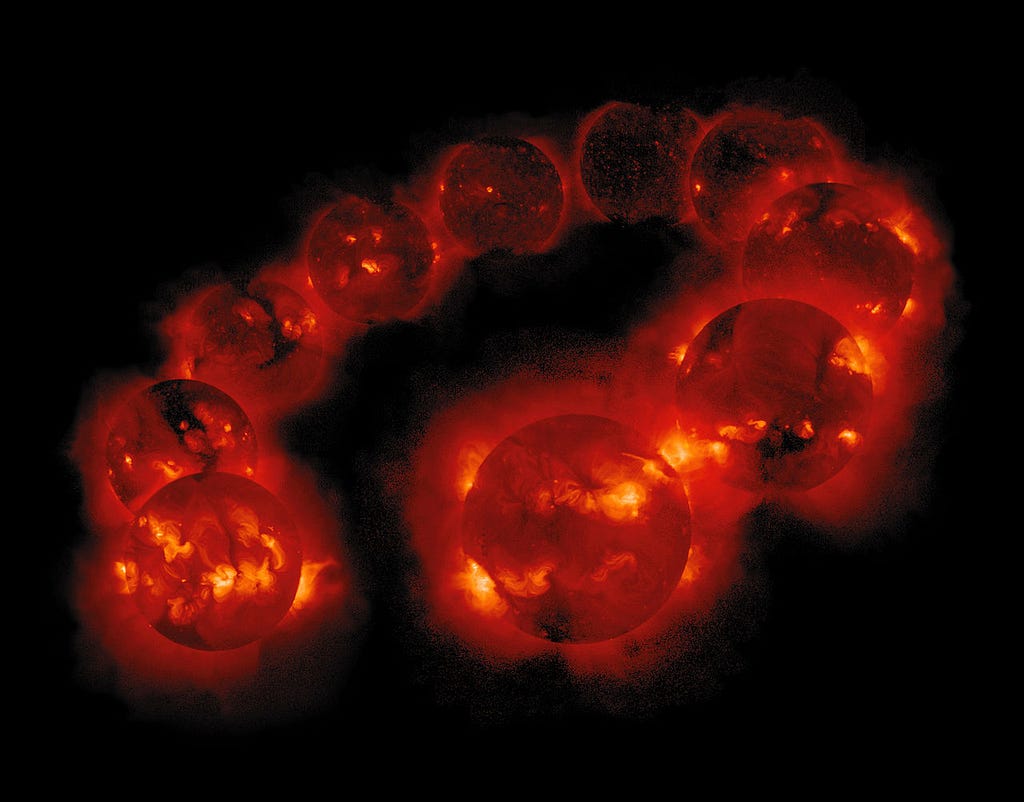

The problem we aimed to solve is called single-shot novel view synthesis: you have one image and want to create another image of the same scene from a different camera angle. There has been a lot of work in this space, but a major challenge remains: when the camera angle changes substantially, the quality of the generated image degrades significantly. We wanted to generate a new view from a single given image while improving its quality, even under very large changes in viewpoint.

How did you go about solving this problem – what was your methodology?

Existing work in this space tends to rely on monocular depth estimation, which means depth is estimated from a single image. This depth information lets us move the camera and transform the image to match the new viewpoint; we call this “warping.” Of course, some parts of the scene will be occluded, and the original image will be missing the information needed to render the scene from the new angle. Therefore, there is always a second phase in which another module fills in (interpolates) the occluded regions. Because these two phases are separate in existing work, geometric errors introduced during warping cannot be corrected in the interpolation phase.
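To make the conventional warp step concrete, here is a minimal sketch in Python with NumPy. It assumes a pinhole camera with known intrinsics `K`, a known relative pose (`R`, `t`), and a depth map already produced by some monocular depth estimator (not shown). The function name and the nearest-pixel splatting with a z-buffer are illustrative choices, not GenWarp's method. The returned mask marks exactly the holes that a separate interpolation stage would have to fill.

```python
import numpy as np

def warp_with_depth(image, depth, K, R, t):
    """Forward-warp `image` (H, W, C) into a new camera using per-pixel depth.

    Hypothetical helper for illustration. Assumes a pinhole camera:
    K: 3x3 intrinsics shared by both views; R (3x3), t (3,): source-to-target pose.
    Returns the warped image and a boolean mask of pixels that received a value;
    False entries are the holes (disocclusions) a second stage must fill.
    """
    H, W, C = image.shape
    ys, xs = np.mgrid[0:H, 0:W]
    # Homogeneous pixel coordinates, shape (3, H*W), in row-major pixel order
    pix = np.stack([xs.ravel(), ys.ravel(), np.ones(H * W)])
    # Unproject to 3D points in the source camera frame
    pts = np.linalg.inv(K) @ pix * depth.ravel()
    # Move points into the target camera frame and project back to pixels
    proj = K @ (R @ pts + t.reshape(3, 1))
    z = proj[2]
    safe_z = np.where(z > 1e-8, z, 1.0)  # avoid dividing by zero behind the camera
    u = np.round(proj[0] / safe_z).astype(int)
    v = np.round(proj[1] / safe_z).astype(int)

    warped = np.zeros_like(image)
    zbuf = np.full((H, W), np.inf)
    mask = np.zeros((H, W), dtype=bool)
    src = image.reshape(-1, C)
    valid = (z > 1e-8) & (u >= 0) & (u < W) & (v >= 0) & (v < H)
    for i in np.flatnonzero(valid):
        # Z-buffer: keep the nearest surface when several pixels land on one target pixel
        if z[i] < zbuf[v[i], u[i]]:
            zbuf[v[i], u[i]] = z[i]
            warped[v[i], u[i]] = src[i]
            mask[v[i], u[i]] = True
    return warped, mask
```

With an identity pose every pixel maps back to itself; translating the camera sideways leaves an uncovered strip at the image border, which is the missing information the second phase has to fill in.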

We solve this problem by fusing everything together. Instead of a two-phase approach, we do it all at once in a single diffusion model. To preserve the semantic meaning of the image, we created another neural network that extracts semantic information from the given image, together with its monocular depth information. We inject this information, using a cross-attention mechanism, into the main base diffusion model. Because warping and interpolation are done in one model, and the occluded parts can be reconstructed well with the help of the injected semantic information, the overall quality improved. We saw improvements in image quality both subjectively and objectively, using metrics such as FID and PSNR.
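The interview does not detail GenWarp's conditioning network, so the following is only a generic single-head cross-attention sketch in NumPy. It assumes the denoiser's tokens act as queries and tokens from a separate encoder of the input view's semantic and depth features act as keys and values. The weight matrices `Wq`, `Wk`, `Wv` and the residual addition are hypothetical choices for illustration; the residual requires the value dimension to equal the denoiser's feature dimension.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(hidden, context, Wq, Wk, Wv):
    """Generic single-head cross-attention (illustrative, not GenWarp's exact layer).

    hidden:  (N, d_model) tokens from the denoising network -> queries.
    context: (M, d_ctx) tokens from a separate conditioning encoder
             (hypothetically: semantic + depth features) -> keys and values.
    Wv must map to d_model so the result can be added back residually.
    """
    q = hidden @ Wq                          # (N, d_k)
    k = context @ Wk                         # (M, d_k)
    v = context @ Wv                         # (M, d_model)
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (N, M) scaled dot products
    weights = softmax(scores, axis=-1)       # each query's weights over context sum to 1
    return hidden + weights @ v              # residual add injects the conditioning
```

Setting `Wv` to zeros turns the injection into a no-op, which is a quick sanity check on the residual path.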

Can people see some of the images created using GenWarp?

Yes, we actually have a demo, which consists of two parts. One shows the original image and the other shows the warped images from different angles.

Moving on to the PaGoDA paper, here you were addressing the high computational cost of diffusion models. How did you go about addressing that problem?

Diffusion models are very popular, but it’s well known that they are very costly to train and to run. We tackle this issue with PaGoDA, a model that improves both training efficiency and inference efficiency.

Inference efficiency is the easier one to explain, since it directly determines the speed of generation. Diffusion usually takes many iterative steps to reach the final output; our goal was to skip these steps so that we could generate an image in just one step. People call this “one-step generation” or “one-step diffusion.” It doesn’t always have to be a single step; it could be two or three steps, for example, which is called “few-step diffusion.” Basically, the goal is to remove the main bottleneck of diffusion: its time-consuming, multi-step iterative generation process.

In diffusion models, generating an output is typically a slow process, requiring many iterative steps to produce the final result. A key trend in advancing these models is training a “student model” that distills knowledge from a pre-trained diffusion model. This allows for faster generation, sometimes producing an image in just one step. These are often referred to as distilled diffusion models. Distillation means that, given a teacher (a pre-trained diffusion model), we use it to train another, efficient one-step model. We call it distillation because we distill the knowledge of the original model, which has learned a great deal about generating good images.
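A toy illustration of the distillation idea, assuming a deliberately simple “teacher” that nudges a scalar noise sample toward a fixed value over 79 small steps (the step count quoted later in the interview). Because this teacher's end-to-end map is affine, a one-step “student” fitted by least squares reproduces it exactly. All names and constants are hypothetical; real distillation methods, PaGoDA included, train a neural network with their own objectives, which this sketch does not attempt to reproduce.

```python
import numpy as np

# Hypothetical toy constants: target value, per-step rate, number of teacher steps
MU, RATE, TEACHER_STEPS = 3.0, 0.05, 79

def teacher_sample(z, steps=TEACHER_STEPS):
    """Toy 'teacher': moves noise z toward MU one small step at a time.

    Stands in for a multi-step diffusion sampler; in a real model each loop
    iteration would be one network evaluation.
    """
    x = np.array(z, dtype=float)
    for _ in range(steps):
        x = x + RATE * (MU - x)
    return x

def distill_one_step(num_samples=1000, seed=0):
    """Fit a one-step 'student' x = w * z + b to reproduce the teacher's outputs."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal(num_samples)
    target = teacher_sample(z)                  # expensive: many steps per sample
    A = np.stack([z, np.ones_like(z)], axis=1)  # design matrix for (w, b)
    (w, b), *_ = np.linalg.lstsq(A, target, rcond=None)
    return lambda noise: w * noise + b          # cheap: a single evaluation
```

The expensive part, running the teacher on every training sample, is paid once during training; at generation time the student needs a single evaluation.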

However, both classic diffusion models and their distilled counterparts are usually tied to a fixed image resolution. This means that if we want a higher-resolution distilled diffusion model capable of one-step generation, we would need to retrain the diffusion model and then distill it again at the desired resolution.

This makes the entire pipeline of training and generation quite tedious. Each time a higher resolution is needed, we have to retrain the diffusion model from scratch and go through the distillation process again, adding significant complexity and time to the workflow.

What is unique about PaGoDA is that it trains models across different resolutions within one system while still achieving one-step generation, which makes the workflow much more efficient.

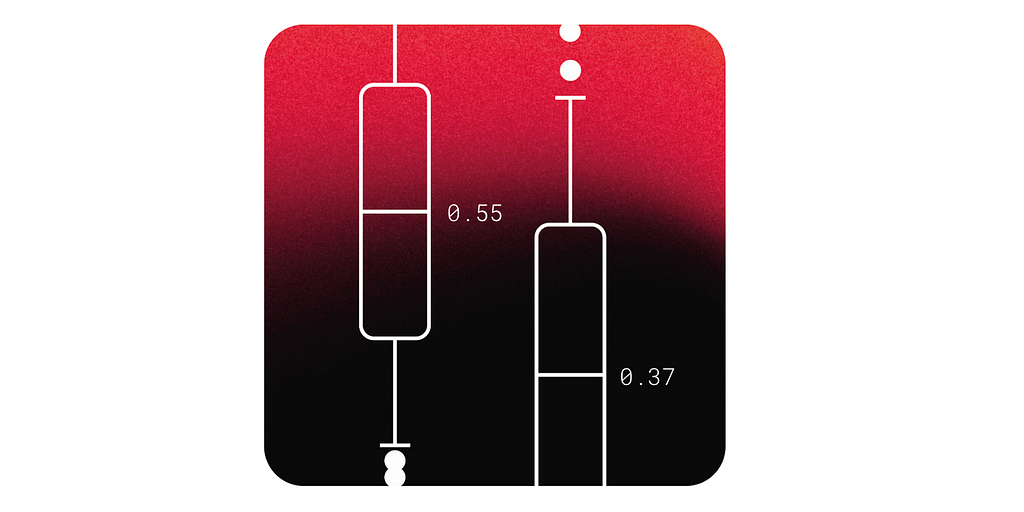

For example, if we want to distill a model for images of 128×128, we can do that. But if we want to do it at another scale, let’s say 256×256, then the teacher would also need to be trained at 256×256. Extending to even higher resolutions means repeating this process multiple times. This can be very costly, so to avoid it we use the idea of progressive growing training, which has been studied for generative adversarial networks (GANs) but not so much for diffusion models. The idea is that, given a teacher diffusion model trained at 64×64, we can distill its knowledge and train a one-step model for any resolution. At many resolutions, PaGoDA achieves state-of-the-art performance.
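The interview does not spell out PaGoDA's training losses, so the following is only a hypothetical sketch of the progressive-growing bookkeeping. It shows a schedule that doubles the resolution from the teacher's 64×64 base, and a consistency term, assumed here purely for illustration, that average-pools a higher-resolution student output back to the base size and compares it with the teacher's low-resolution output. All function names are hypothetical.

```python
import numpy as np

def resolution_schedule(base=64, target=512):
    """Resolutions visited when growing a generator by factors of 2 from `base`."""
    stages = [base]
    while stages[-1] < target:
        stages.append(stages[-1] * 2)
    return stages

def avg_pool(img, factor):
    """Downsample an (H, W, C) image by averaging non-overlapping factor x factor blocks.

    Assumes H and W are divisible by `factor`.
    """
    H, W, C = img.shape
    return img.reshape(H // factor, factor, W // factor, factor, C).mean(axis=(1, 3))

def low_res_consistency(student_out, teacher_out):
    """Hypothetical loss term: MSE between the downsampled student image and
    the teacher's base-resolution image (not PaGoDA's published objective)."""
    factor = student_out.shape[0] // teacher_out.shape[0]
    return float(np.mean((avg_pool(student_out, factor) - teacher_out) ** 2))
```

If `target` is not `base` times a power of two, the schedule stops at the first resolution above it.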

Could you give a rough idea of the difference in computational cost between your method and standard diffusion models. What kind of saving do you make?

The idea is very simple: we just skip the iterative steps. It is highly dependent on the diffusion model you use, but a standard diffusion model historically used about 1000 steps. Modern, well-optimized diffusion models require 79 steps. With our model that goes down to one step, so we are looking at roughly an 80-fold speedup, in theory. Of course, it all depends on how you implement the system, and if the chips provide parallelization mechanisms, people can exploit them.
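The back-of-the-envelope arithmetic behind the quoted figure, assuming every step costs the same time (one network evaluation, written t_step below) and ignoring fixed overheads such as decoding:

```latex
\text{speedup} \approx \frac{N_{\text{teacher}}\, t_{\text{step}}}{N_{\text{student}}\, t_{\text{step}}}
= \frac{79}{1} \approx 80,
\qquad
\frac{1000}{1} = 1000 \quad \text{(for a classic 1000-step sampler)}.
```

Measured speedups will differ depending on the implementation and on how much parallelism the hardware offers.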

Is there anything else you would like to add about either of the projects?

Ultimately, we want to achieve real-time generation, and not just have this generation be limited to images. Real-time sound generation is an area that we are looking at.

Also, as you can see in the animation demo of GenWarp, the images change rapidly, making it look like an animation. However, the demo was created with many images generated with costly diffusion models offline. If we could achieve high-speed generation, let’s say with PaGoDA, then theoretically, we could create images from any angle on the fly.

Find out more:

- GenWarp: Single Image to Novel Views with Semantic-Preserving Generative Warping, Junyoung Seo, Kazumi Fukuda, Takashi Shibuya, Takuya Narihira, Naoki Murata, Shoukang Hu, Chieh-Hsin Lai, Seungryong Kim, Yuki Mitsufuji.

- GenWarp demo

- PaGoDA: Progressive Growing of a One-Step Generator from a Low-Resolution Diffusion Teacher, Dongjun Kim, Chieh-Hsin Lai, Wei-Hsiang Liao, Yuhta Takida, Naoki Murata, Toshimitsu Uesaka, Yuki Mitsufuji, Stefano Ermon.

About Yuki Mitsufuji


Yuki Mitsufuji is a Lead Research Scientist at Sony AI. In addition to his role at Sony AI, he is a Distinguished Engineer for Sony Group Corporation and the Head of Creative AI Lab for Sony R&D. Yuki holds a PhD in Information Science & Technology from the University of Tokyo. His groundbreaking work has made him a pioneer in foundational music and sound research, such as sound separation and generative models that can be applied to music, sound, and other modalities.
